The state of scrape

The diffusion quality gap, recent adventures in consent, and why ethics should be a no-brainer for leaders

On the next substack over, aptly surnamed content protection ace and fellow AI hype debunker Pascal Hetzscholdt pits state-of-the-art LLMs into various controversial crossroads between AI and IP. Most recently at time of writing, he made the comically misaligned duo of ChatGPT-4o & Dall-E3 perform some impressive logical gymnastics by presenting variations on trademarked characters while promising not to:

Even as stupid simple jailbreaks go, this one went beyond. File under “own goals”.

Such examples smash the common marketing-led misconception of prompting as an act of creation ex nihilo rather than one of retrieving remixes from a Very Large Content Repository.

My previous post argued that framing commissioning as creation in turn frames the main political economy issue of generative AI as automation vs labor instead of appropriation vs licensing.

Let us now dip a toe in the quality gap between present image models sourced from the actual commons, vs the ones sourced through terra scale misappropriation from a world wide web held as if it were the commons.

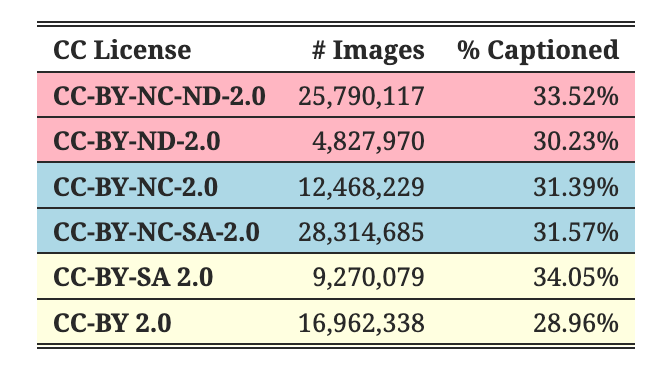

CommonCanvas: an ethical-ish frontier

The research team behind the CommonCanvas set of diffusion models set out with a bold ambition: to match Stable Diffusion 2 image generation quality using only 3% of their training data — the total cache of images available under a somewhat permissive Creative Commons license. At 30 million images declared free for commercial use (caveat below), and 70 million free for non-commercial use, their selected datasets match the order of opt-outs gathered at the training of SD2.1 (~80 million). Sounds impossible, right?

Still, the results are none too shabby:

Unsurprisingly though, famous characters turn out hilariously bad:

This is of course a far cry from the piracy-driven state-of-the-art. There is no beating Promptbase + Midjourney for Disney bootlegs.

Famous characters is one of many areas admitted as weak by the authors. But reaching parity even in some areas with 3% of the dataset size should give some pause as to quality vs quantity — and highlight just how much of a junk filtering challenge it is to work with the Laion datasets.

But don’t take my words for it: by all means take CommonCanvas for a spin!

Unstable attribution

To the caveat, then: rights-aware readers of the CommonCanvas paper will spot a glaring emission in the dataset footnotes:

All of the above licenses include “by.” That is, they assert the creator’s fundamental moral right to attribution — something diffusion models, as well as LLMs, are famously unable to deliver. By design.

And here is where the obliterated social contract of the internet keeps gyrating in its shallow grave: creators post under Creative Commons license not out of charity — that would be Public Domain / CC0 waivers — but in order to trade their display and distribution rights for recognition. CC-BY is a quid pro quo: “by all means use this commercially, just give me credit”.

A marketing gambit. Not a bucket of KY Jelly on a bear rug in front of an open fire.

So we’re back at square one: nobody signed up for this.

I would love to see a straight de minimis or fair use argument for this, but they are conspicuously absent from debate. Instead we get anthropomorphisation (“learns like people”) or tech obfuscation (“stored as math”) or greater good (“cures cancer”) or race-to-the-bottom (“what if China?”). Which makes generative AI still come off as a bet by techno-capital against fundamental rights to privacy, integrity, trademark and property, more than anything else.

Meanwhile, in degenerative AI land, one popular image generator for running models locally shows these depressing stats:

Some 7~8 million downloads of Stable Diffusion versions that contain not only hundreds of millions of copyright works (that would be all 13 million downloads above), but more alarmingly troves of porn including CSAM material (the since-recalled 1.X versions) to be generated at will.

Consent in crisis

This is the title of a recent paper by Cohere for AI on the state of data provenance and licensing. As New York Times and others report, it holds several discoveries on the state of scrape.

From their abstract:

We observe a proliferation of AI specific clauses to limit use, acute differences in restrictions on AI developers, as well as general inconsistencies between websites’ expressed intentions in their Terms of Service and their robots.txt. We diagnose these as symptoms of ineffective web protocols, not designed to cope with the widespread re-purposing of the internet for AI. (…) a full 45% of C4 is now restricted. If respected or enforced, these restrictions are rapidly biasing the diversity, freshness, and scaling laws for general-purpose AI systems. We hope to illustrate the emerging crisis in data consent, foreclosing much of the open web, not only for commercial AI, but non-commercial AI and academic purposes.

Or put more cheekily by yours truly:

AI: ”We took all your stuff. You may opt out now. From the next version.”

Publishers: ”f**k you pay us. Read the ToS!”

AI: ”What’s that? CAN’T HEAR YOU OVER THIS MASSIVE SCRAPER”

Publishers: ”Read the robots.txt sign then!!”

Google: ”Huh. Well. Shame if something happened to your search ranking”

Perplexity: What sign lel

Greybot army: Welp you didn’t put up one for each of THESE bots.

Blackbot army: I’m a real boy! Peeple-peeple nom nom nom

Brash scrapists have thoroughly tinted the pool — and continue to do so — spoiling the fun for everyone. The trend lines in the paper clearly show the slow death of the open internet, as well as a glaring consent gap between what content-driven online business now hastily added to their terms, and are technically able to reserve against. And ToS vs robots.txt aside, captchas are no match for motivated attackers.

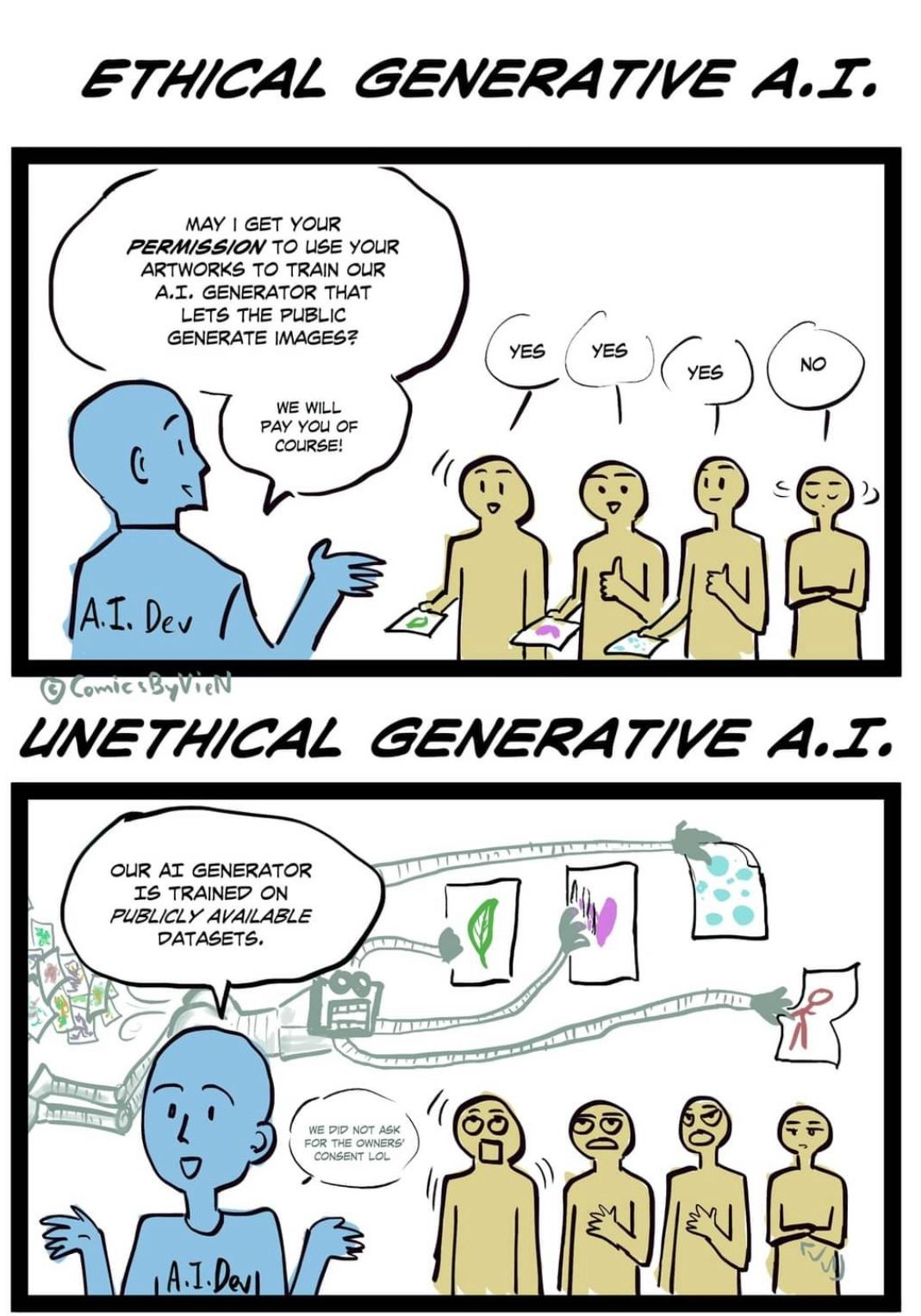

Ecce homo

So what is happening here? My bet is always with the cartoonists to capture it best. Malay penslinger VulpieNinja knocked this one out of the park in my humble opinion:

We either cut the “data source” in on the generative AI action — and respect their consent to what ends their property is being put to use — or we don’t.

Speaking of which: why not buy Vulpie a coffee! I already did.

A rapid maturation of data licensing markets is inevitable, as the value of content remixers becomes more apparent, licensors team up and regulatory pressures mount.

As the present degenerative AI blackbot scraping and slop flooding creates a zero trust environment and a dying internet, machine readable fingerprinting, content provenance, licensing information and content scrambling will become standard for online assets.

Until then, as the Consent in Crisis paper also concludes, it’s pretty much resist or die by legal means.

And more and more do.

All in on #noAI

To end on a more positive note, Dark Horse Comics, LLC, third largest US publisher of manga and graphic novels and home to some 350+ entertainment properties such as Hellboy, Sin City, Emily The Strange and Usagi Yojimbo recently announced that they go all in on #noAI, stating on Twitter:

Dark Horse Comics was originally founded to establish an ideal publishing atmosphere for creative professionals, and maintains this focus on supporting independent creators to this day. As such, Dark Horse does not support the use of AI-generated material in the works that we publish. Our contracts include language stating that the creator agrees that the work will not consist of any material generated by computer Artifical Intelligence programs. Dark Horse is committed to supporting human creative professionals with our business.

This follows not long after creator-centric arts platform Cara reached a million users, and video platform Vimeo put forth a thoughtful and widely lauded AI position:

We are taking a definitive stance contrary to many other community websites: Vimeo will not allow generative AI models to be trained using videos hosted on our platform without your explicit consent, even if you use our free offerings. In addition, we prohibit unauthorized content scraping (by model companies) and continue to implement security protocols designed to protect user-generated content.

It’s a sad state of affairs when all a CEO has to do for roaring ovation is promise not to steal from his customers. Although to be fair, they also employ best-in-class anti-scrape to back up their words.

As long as Adobe and other #degenerativeai leaders keep breaking trust and pushing loyal customers away by racing to the bottom of “if you paid us exorbitant fees over decades, you’re still the product” and as long as journalists, writers, coders and platform users increasingly find themselves rug-pulled and sold down the river by employers and former licensees, these examples show:

Ethical tech leaders can capture a windfall of loyal refugee users by just standing up for basic decency.

Hat tip to Matthew Small for pointing me to CommonCanvas!