Top ten lies about AI art, debunked

A best-of ahead of Karla Ortiz’s testimony before the Senate Judiciary Committee.

July 12th marks the first time an artist takes the witness stand before the US Senate Committee on the Judiciary on the topic of Artificial Intelligence and Intellectual Property. That artist, Karla Ortiz, is a concept artist, one of the plaintiffs in the class action lawsuit against Stability.ai, Midjourney and DeviantArt over the illicit use of artists’ work as training data, and the poster girl for the image-cloaking software Glaze.

She will face an uphill battle, as the committee will no doubt have heard its fair share of disingenuous framings, misleading tech explainers and bright techno-futures planted by the cavalcade of tech CEOs and investors who preceded her on the stand.

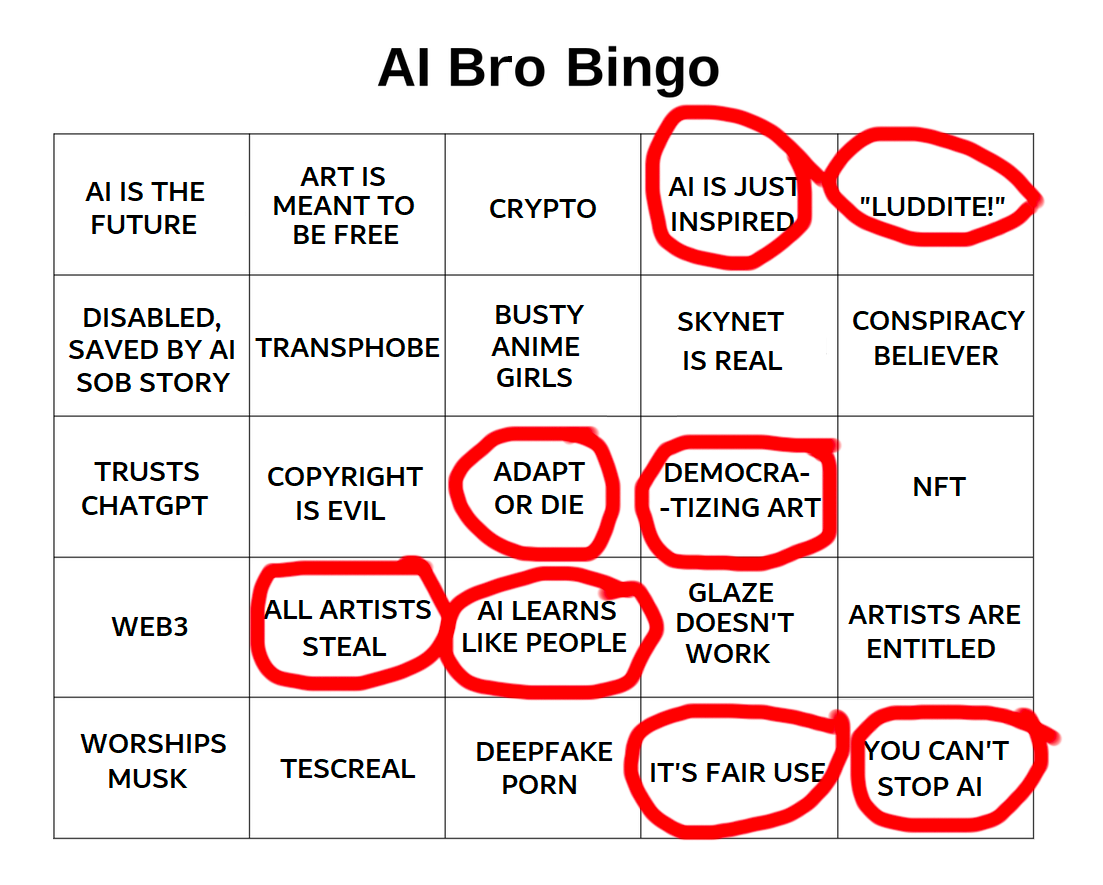

Please indulge this seasoned veteran of the online creators’ rights debate as I call out and dismantle some of the incessantly recurring horse manure artists have to wade through on a daily basis when asserting their fundamental rights.

Service providers, tech investors, developers, scrapers and their army of useful-idiot prompters who enjoy the free ride and the quick bucks all use these talking points. It is by no means a comprehensive list. But it’s a start.

The cat is out of the bag

“Luddite”

Amplifying human ability

Democratizing creativity

Prompting is art

Learns like humans

It’s not copying

It’s just a tool

Tech is fast, law is slow

Adapt or die!

1. The cat is out of the bag

See also: the train has left the station, the ship has sailed, the genie is out of the bottle, resistance is futile, game over, etc.

The figure of thought here is that technological development is inevitable and unstoppable. And while it is true that technology can’t be uninvented, and that all technology instantly and deeply affects how we think and act, there is also no such thing as unregulated technology. Tech found in violation of existing law and fundamental rights, or otherwise net negative to society, eventually gets pulled off the market and/or pushed underground.

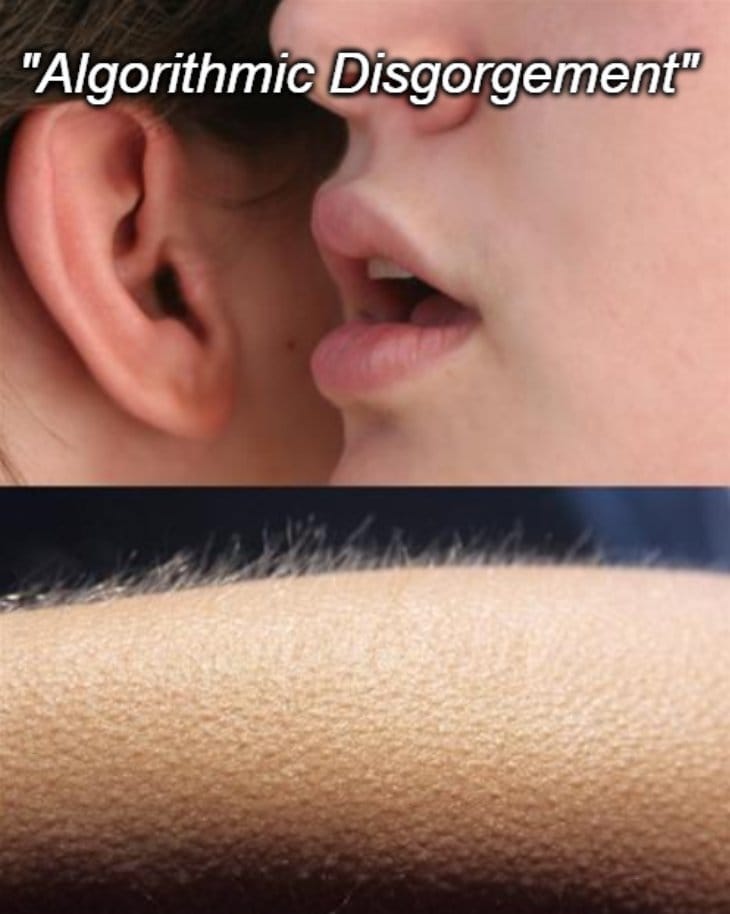

If algorithms can’t unlearn, that is not your problem but theirs. There’s even a word for what happens if they can’t comply: algorithmic disgorgement.

See also:

The rise, fall and brand re-use of Napster (Wikipedia)

“The FTC’s new enforcement weapon spells death for algorithms” (Protocol)

“Well, your cat kept shitting in everyone’s kitchen, so we decided to put it down.”

2. “Luddite”

Legitimate critique of new technology and its (mis)uses is labeled as being backwards and fearful. Most often this is a method of strawmanning critical positions as anti-tech, and a convenient way of silencing voices that stand up for universal fundamental rights to property, consent, credit and compensation.

This misrepresents the Luddites, and is often a sign of misapprehending technology as universally positive, or value-neutral.

What the Luddites really fought against (Smithsonian Magazine)

“The Luddites fought for your rights, and so do I.”

“I’m not anti-tech, I’m pro property rights.”

3. Amplifying human ability

A favorite expression of best-selling author and tech investor Reid Hoffman, with three questionable assumptions baked in:

Assumption: value “created” by an AI service is somehow separate from the value stolen in order to provide the service through practices like pirating copyrighted material, scouring your private messages and raiding shop windows for raw materials – and from the value destroyed by flooding every sales venue and media outlet with low-effort automated content, costing the makers of the original works visibility, sales and job opportunities.

What amplifies at one end disempowers at the other, while adding a technology obligation that wasn’t there before. Value is not “created” as much as transferred, and energy and resource use is added, to the primary benefit of – tech companies.

Assumption: amplifying human ability is inherently good, or at least value-neutral. Which flies in the face of accelerated spam and scams, sludge flooding, deepfake harassment and propaganda, programmatic ad fraud, etc. (DuckDuckGo-ing examples is left as an exercise for the reader.)

Assumption: human ability somehow improves with lack of practice, when all historic evidence and common sense point in the opposite direction.

One man’s amplification is another’s disempowerment,

and the tech companies laugh all the way to the bank.

4. Democratizing creativity

The idea here is that remixing unlicensed copyrighted works from creative professionals to give away to everyone else is not so much an act of misappropriation as one of democratization. Which, by remarkable coincidence, is the exact wording that one of the largest piracy hubs and suppliers of AI training data also uses in its slogan.

The “public good” of everyone getting free stuff is here somehow held to invalidate the owner’s objections to everyone getting their stuff for free.

Democratizing results of course has nothing to do with democratizing creativity. That’s just a lazy shortcut – see “amplification” above.

“Good artists borrow, great artists steal.

– Then how come yours does both, yet manages to be neither?”

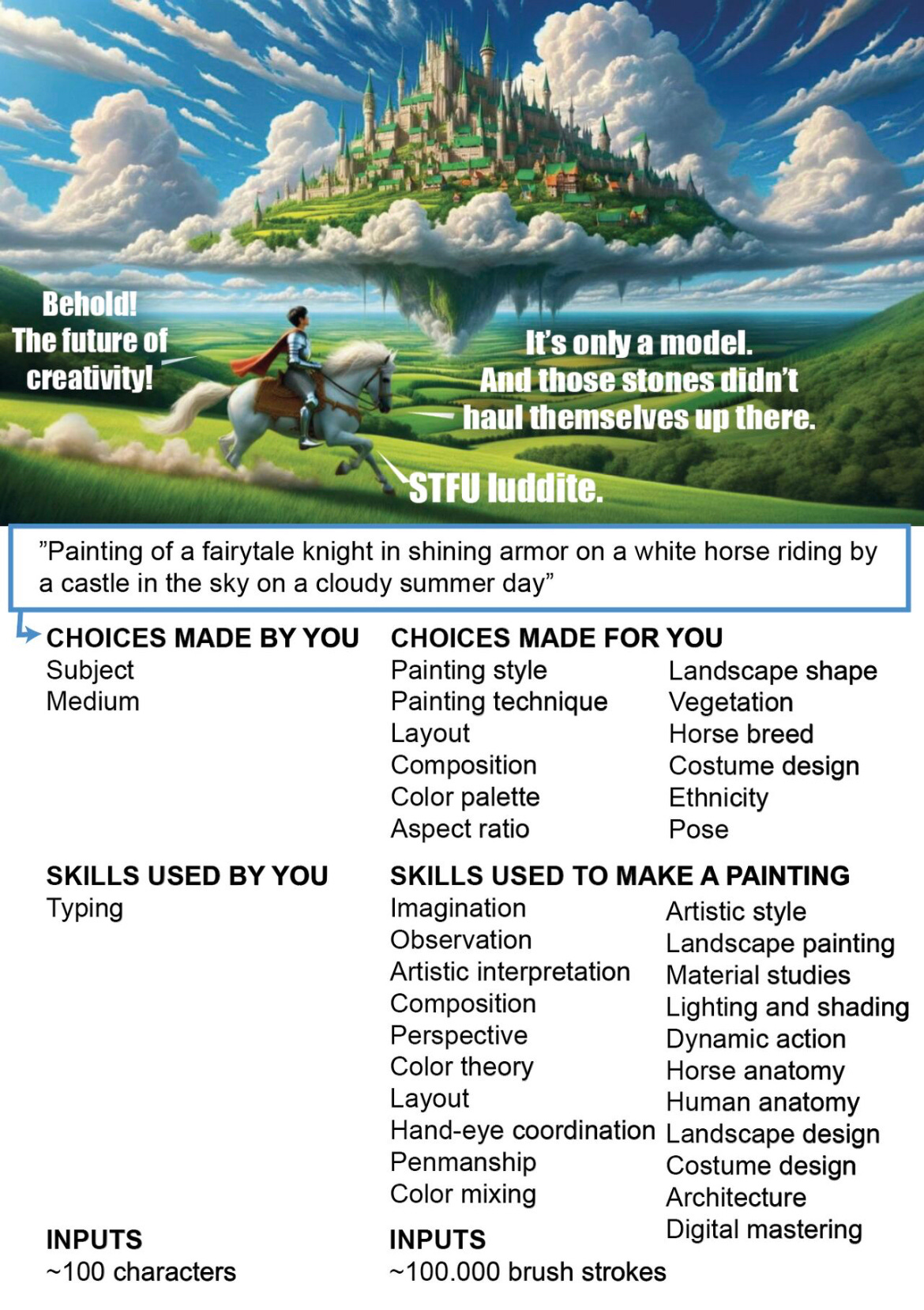

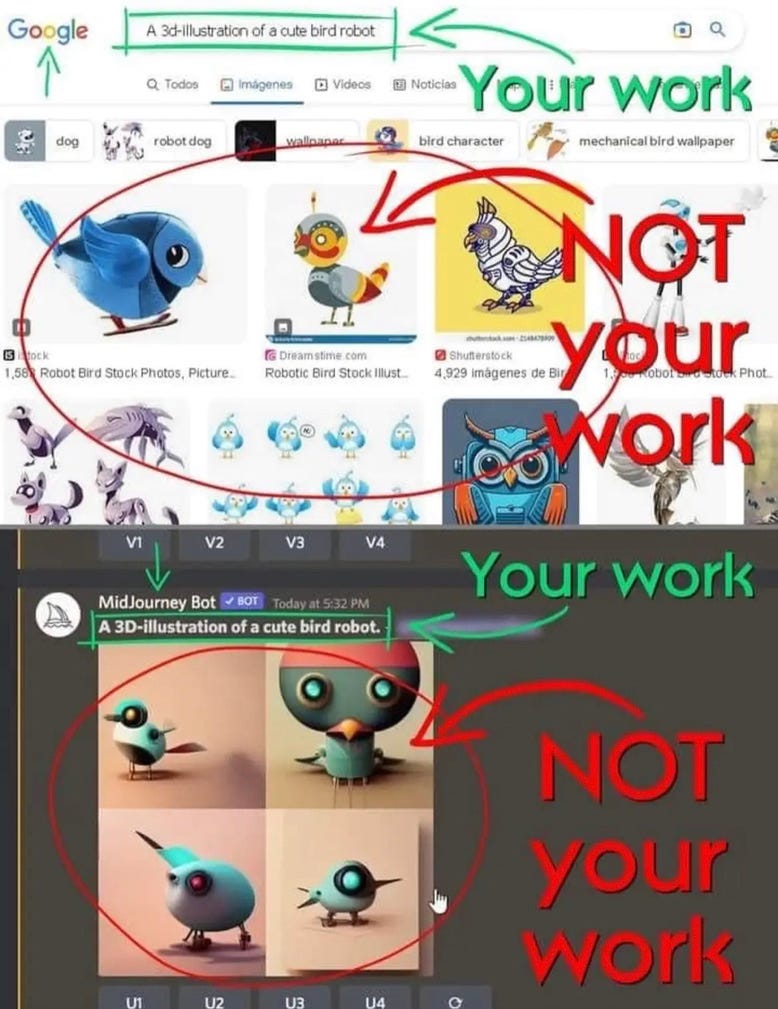

5. Prompting is art

The idea here is that users somehow contribute creatively in a substantial way.

Prompting is retrieval of images from a statistical model of underlying images. The prompt is converted to a coordinate. Any random text string generates a coordinate.

Prompting as a creative activity is one of iterative discovery and retrieval – not one of skilled and controlled execution – hence not a way to produce copyrightable work.

A million people would have gotten the same image from the same prompt, if the services didn’t add noise.
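To make the “coordinate” point concrete, here is a toy sketch in Python. It is not how any real service is implemented: `prompt_to_coordinate` is a hypothetical hash-based stand-in for a learned text encoder (a real encoder places similar prompts near each other, which a hash does not), and `generate` is a hypothetical stand-in for the image model. What it does illustrate is the structure described above: any string, even gibberish, maps to a coordinate, and once the noise seed is fixed, the same prompt always returns the same output.

```python
import hashlib
import random

DIM = 8  # toy coordinate size (assumption); real text encoders use far more dimensions


def prompt_to_coordinate(prompt: str, dim: int = DIM) -> list[float]:
    """Hypothetical stand-in for a text encoder: deterministically maps ANY
    string to a point in a dim-dimensional space via a SHA-256 hash."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()  # 32 bytes
    # Use one byte per dimension, scaled from 0..255 to the range [-1, 1].
    return [digest[i] / 127.5 - 1.0 for i in range(dim)]


def generate(prompt: str, seed: int, size: int = 4) -> list[float]:
    """Hypothetical stand-in for an image model: the output depends only on
    the prompt's coordinate plus noise drawn from a seeded random generator."""
    coord = prompt_to_coordinate(prompt)
    rng = random.Random(seed)  # the "added noise"; fixed seed -> fixed noise
    return [round(coord[i % len(coord)] + rng.gauss(0.0, 0.1), 6) for i in range(size)]


if __name__ == "__main__":
    prompt = "a castle at sunset, trending on artstation"
    # Same prompt, same seed: identical output, no matter who types it.
    print(generate(prompt, seed=42) == generate(prompt, seed=42))
    # Gibberish still lands somewhere in the space.
    print(prompt_to_coordinate("qwxz plmk"))
```

Changing only the seed changes the output, which is the role the added noise plays here; the prompt itself only picks the starting point.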

To be fair, there are any number of hybrid processes that involve more creative control. It’s a sliding scale.

But the vast majority of prompting is minimum viable effort, be that for shits and giggles or personal inspiration (that few mind) or monetization (that infringed parties do mind).

And the practice and skills you build are not in making art so much as in commissioning art. If even that.

Ideas (choice of subject matter, general direction, expressed as prompts) don’t merit claims to rights over expression (images retrieved from a model, based on other people’s skills and aesthetic choices).

Didn’t before. Doesn’t now. Won’t ever.

(It can still be a fun pastime and an inroad to learning how to actually make images.)

It doesn’t matter how long you spent searching the woods.

Finding a pretty stick does not make you an artist.

6. Learns like humans

The idea here is that what happens inside a machine is somehow equivalent to what happens inside of and between human beings. This manages to fundamentally misunderstand machines, humans AND learning, and so is worth nothing other than dismissal and ridicule.

There is some VERY CLEVER statistical analysis of pairs of text tokens and RGB matrices in n-dimensional space going on.

Which is VERY FAR removed from the process of learning art. Human perception interprets wider ranges of color, and depth, and cross-references seeing with the other senses, and subconsciously filters it through personal experience and bias. Learning art is a social, multi-generational, cultural, embodied, embedded, reciprocal, dynamic, and above all subjective process that engages body, mind, soul and spirit.

What they usually mean is: we copy, too. Anyone who thinks even that is somehow easy, or coldly calculated, or 100% about “stealing moves” hasn’t really tried.

Phenomenal bullshit artistry like this deserves its own post eventually, but let me just briefly translate this Senate Judiciary testimony by Ben Brooks of Stability:

Machine “learning” is a kindergarten-level analogy,

like a camera “seeing” or an engine “running”.

Human learning is reciprocal, embodied, embedded.

The other is a large Excel sheet.

There is no similarity whatsoever in any legal sense, nor any other sense that matters.

7. It’s not copying

This convoluted chain of magical thinking goes something like:

the model could have existed (or, in the future, will exist) without input images.

the quality of output images has nothing to do with the quality of input images.

neither a statistical model of input images, nor the outputs from that model, are derivative works of those input images.

making a model of copyrighted images whose purpose is producing marketplace substitutes of those images is somehow “fair use” and not vicarious infringement.

Lossy storage is also storage.

Imperfect copies are also copies.

Copyright isn’t about copies, but exclusive commercial exploitation rights.

“Fair use” is only an affirmative defense.

The four fair use factors: commercial intent, considerable portion, competing use, corrosive to the market of the original.

8. It’s just a tool

I’ll just leave this here, as Axbom said it better:

Xerox machines and torrent sites are tools, too.

9. Tech is fast, law is slow

A corollary of “move fast and break things”.

Law famously evolves slowly, but that is not a bug; it is a feature of a stable, inclusive, democratic process.

Principles of intellectual property law haven’t changed in a century. Those principles were stable enough to survive the advent of radio, TV, the internet, home computing, CD burning, etc., as they in turn rest on the fundamental, universal rights to life, liberty and property.

As an example, after the entire Napster debacle, the only change actually made to the legal text was two words, clarifying that copies need to be “lawfully obtained.” (One might wonder why obeying the law needs to be spelled out in the law, but here we are.)

Also, digital online services are not exempt from compliance with any number of existing laws on copyright, privacy and integrity just because they are labeled “AI”.

Irresponsible service providers and dishonest users see a golden opportunity in this gap where jurisprudence has yet to catch up with principles. They ignore moral rights to credit and consent, hurry to enrich themselves before compensation models are settled, and, to the main point of this article, do intense public relations work to confound public debate and delay the legislative process.

“Just because you got away with it,

doesn’t mean it’s moral, legal or ethical.”

10. Adapt or die!

AI is often claimed to be just another tech shift to get used to, like when photography or CGI came along.

When photography was new, users no doubt claimed it “painted just like humans.” But no legal system grants works of photography the same scope and duration of protection as original art – nor do they allow unlicensed reproduction of existing works through photography – as it was recognized that:

Photography, on average, required less skill and effort and produced less inherent originality, and thus merited less protection (shorter terms).

Photography had the potential to cannibalize other art, and thus needed legal limits on its protection. And yes, that goes for other art forms, too.

No technology prior to image generators was wholly dependent on using other people’s unlicensed intellectual property to function. The algorithms are empty, like canvases, film stock, xerox machines, blank CDs or fresh memory sticks. The models are what hold any value at all.

Those models aren’t dependent on unlicensed intellectual property by technical necessity, but by calculated business decision.

They could have based them on public domain material. But they didn’t, as it just wouldn’t be any good.

They could have licensed that material. But that would never have been profitable.

And they probably realized no professional would have agreed to have their works weaponized to undercut themselves.

So they elected to steal wholesale, both from living professionals and from a creative amateur public – whose property rights to their creative work are, incidentally, just as strong.

And they push hard to brand content as technology, and both as some techno-magical demigod. “A.I.” sure sounds sexier than “pirated internet concentrate to rent”.

It all starts with ignoring the moral right to consent to the use of creative works, which is as fundamental as it is universal.

There is a research exception from prior consent for AI scraping. That is NOT the same as a blanket fair use exception to build commercial products, nor a license to recklessly open-source products that get used for deepfake harassment, propaganda and producing any vile content in a non-consenting artist’s style.

No means no.

And there are 1.4 billion registered no’s, and counting. Even so, all of those images are still included in “ethical and legal” products for sale.

Until that stops, pirates profit and creators bleed.

Every hour of every day.

“I’ve democratized your art!

– F**k you, pay me.”

Please forward this to whoever might find it encouraging or helpful.

What are some lies and annoying talking points you want debunked? Leave suggestions in comments.

![May be an image of 1 person, money and text that says 'EASY MONEY 12:51 Easy] Get Rich Selling AI Art [100% Legal & Dylan Burrows 1.6K views 3 days ago'](https://substackcdn.com/image/fetch/$s_!ptYR!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fdde8d76d-2d6f-409b-b909-ff13d3167db0_1028x906.jpeg)
