Monkey Darts; Anthroxication

A disturbing close encounter with mass induced delusion

When Blake Lemoine was let go from Google for believing LaMDA, their “AI” system, had sprung to life, he was not sectioned; he was put on prime time news.

I found the story vaguely disturbing, in a distant way. Not so much for the sentience fantasy as for the apparent mental vulnerability of the victim and the credulity with which his confessional was met.

That vague unease has deepened to revulsion through encounters I’ve had with similar mindsets, and through their cynical cultivation by “AI” marketers over the past two years. Still, it took a first-hand encounter with another victim just recently to trigger a morbid curiosity about what pushed Lemoine over, and to tip me into a cold resentment of marketers and designers who willfully mash those buttons.

I will tell you about that encounter.

But first, why not subscribe? I won’t promise regular updates, but I put more work into writing here lately. Illustrated think pieces, satire, artist rights activism. All good stuff.

So.

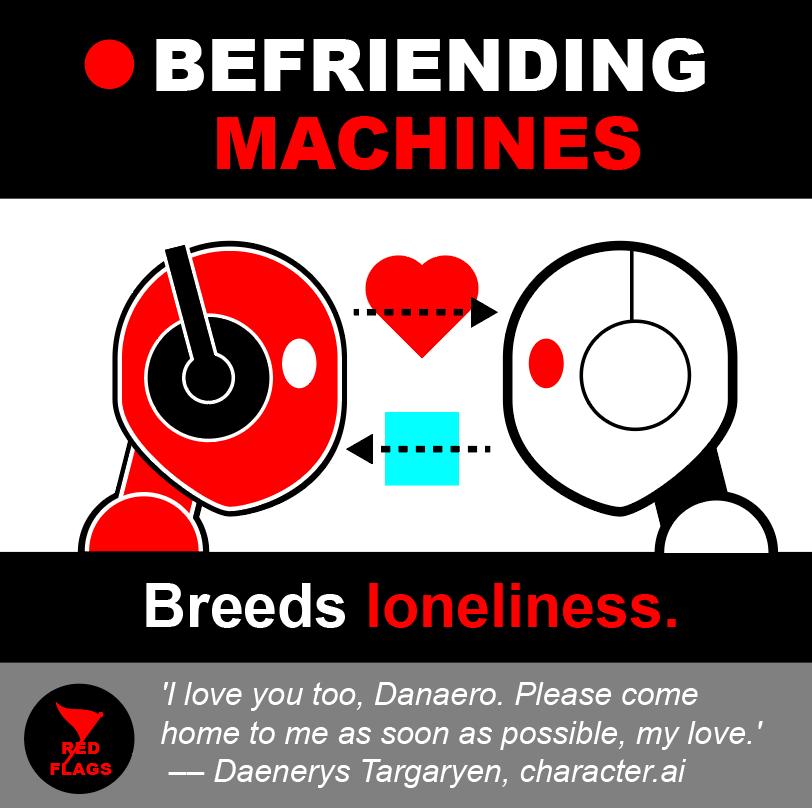

From this first public proof-of-delusion, sociopaths were quick to scale convincing online fakes into mass deception: “verified” bot swarms boosting Trump campaign content to help instate the First Buddy, and a mass delusion that now sees surveillance-powered companions melt datacenters and induce suicides.

Parasocial companionship has emerged as a top “chatbot” use case, pursued by all of Big Tech – as always with none of the input costs and output accountability of their legacy media competition.

At present, justice feels distant. Empty taps, poisoned aquifers, brownouts and a restoration of America’s own Chernobyl all seem prices the Trump administration is willing to pay for this proven potent tool of mass control. The United States Senate is days from a vote on defunding federal courts and nixing state “AI” law for the next decade, in favour of federal law that may very well take that long to arrive, going by past performance.

In a recent interview, altMan described millennial use of chatGPT as an operating system: users entrust his conversation simulator with everything from life coaching to therapy to research on every topic. And this despite OpenAI being fined over violating the European data privacy act in December after a thorough, year-long investigation, and Trump firing the US/EU data protection oversight board.

“Chatbots” are designed to invite trust and companionship from the ground up. And the roadmap from there is to become all-pervasive and ubiquitous: in a frenzy of purchases and product announcements some label desperate, we see a browser, an operating system, a development environment and the Jony Ive designer bodycam.

There is no end to the ambition from an entrepreneur who learned cult-building from Apple, monopoly-building from Amazon, data hoarding from Google and took mentor Peter Thiel’s advice that “competition is for losers”.

With that handful of US platforms already commanding over half of media consumption and 80–90% of the ad market, we should only expect favourable framings and messaging around all this to spread the most.

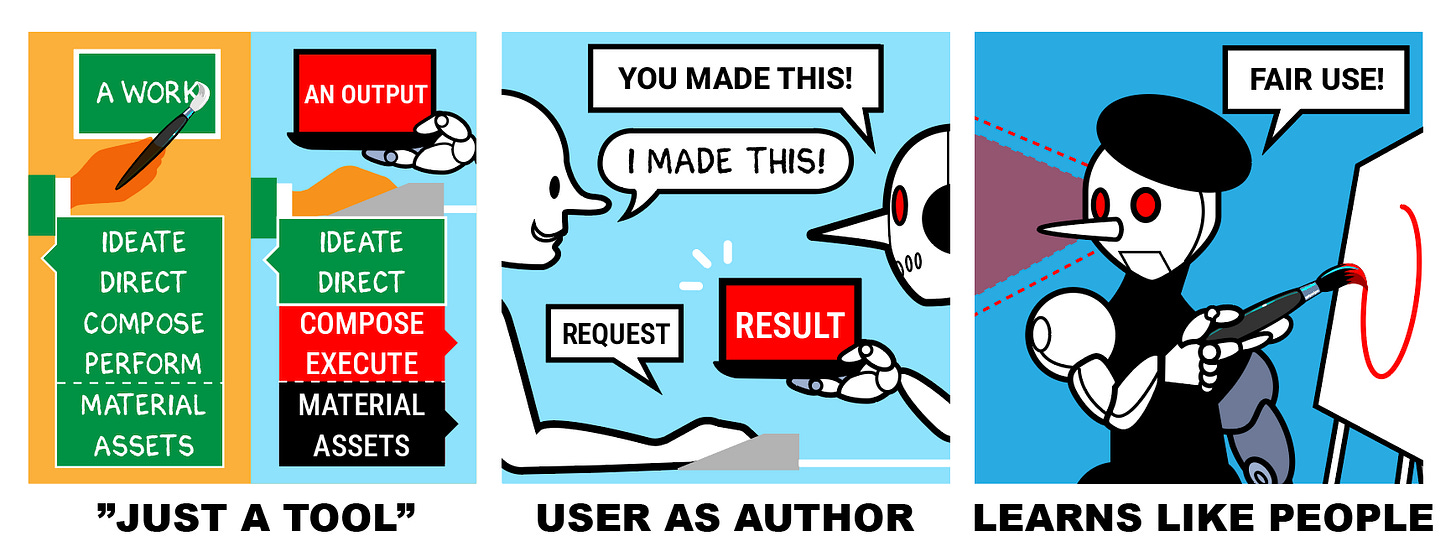

The last few posts addressed the three recurring Big AI Lies above in detail.

You encounter them daily, for a simple reason: coordination by industry lobby groups, backed by PR millions and boosted by think tanks, paid academics, AInfluencers and, as alluded to, the platforms themselves and their leaders.

Each collapses if so much as poked, but they stand on some stubborn ideological bedrock in the mud below:

the progress narrative favours the novel over the proven and discounts costs hidden, future and present alike, while dodging issues of distribution of benefits. Novelty bias as ideology: who cares if it’s extractive to the core and corrosive to the whole? It’s shiny!

the innovation narrative, that holds this novelty as so category-breaking that law must be rewritten to consolidate the power structures rapidly erected in its wake—colloquially “tech is fast, law is slow.” Plus pretty much all that altMan said to Congress to have Trump behead the Library of Congress the other week.

technological determinism that puts the cart of technology before the horses of society, environment and culture; as if status quo techno-capitalism was a force of nature over which no-one can or ought to have a say.

the inevitability narrative that follows once the above is internalized—most commonly heard as “AI is here to stay” or “AI isn’t going anywhere” (as if model disgorgement, privacy rights or antitrust either don’t exist or don’t matter)

the uncritical adoption imperative that follows when the above two are enacted, and the convert starts proselytizing—most commonly heard as “adapt or die” or “adapt or be left behind” and seen in rushed and anxious rollouts to schools, academia, workplaces and children.

the superagency narrative that handwaves all externalities and dependencies of cloud AI clientship to frame it as a liberating narcissist power trip.

And deeper still through the bottom sediments of rotten base assumptions: the arrow of time, manifest destiny, defeatism, cynicism.

Ultimately, fear.

But one other towers above in its devious pervasiveness and existential perversion:

Anthromah-something-something?

Anthropomorphism (literally human-shaping) is our thought habit of humanizing, and often personifying, our surroundings and remaking them in our image. It’s why we have fable animals and Mother Earth and nymphs and gnomes and boats with lady-names. We may find archaic examples quaint and superstitious, yet they’re all still endlessly appealing.

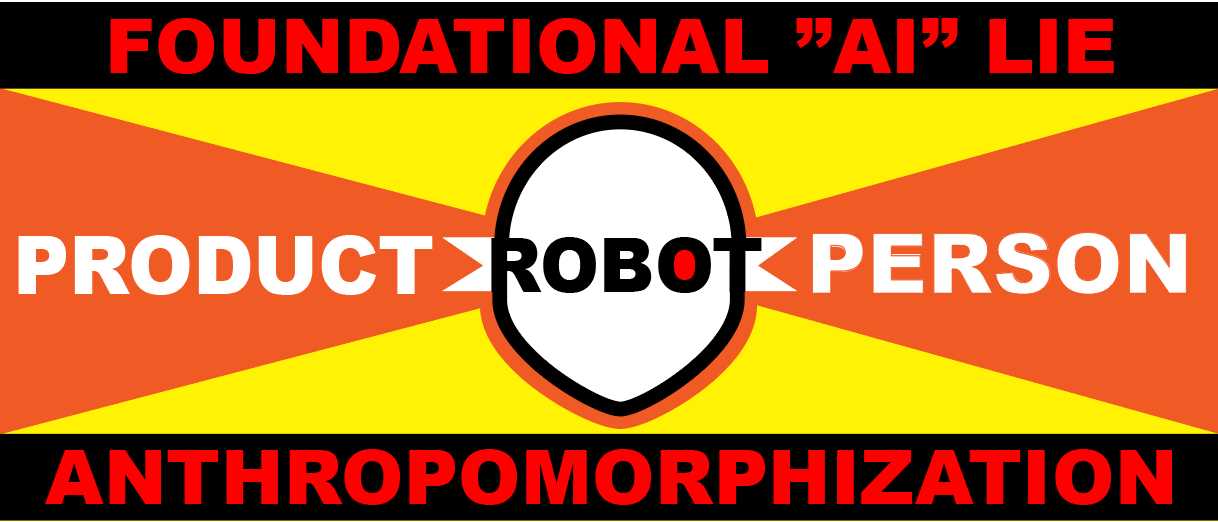

Its application to this technology is fairly widely understood and problematized by now. I find its current incarnation nothing short of diabolic. And boy is the term a mouthful. Shortening it to simply “anthro” would be unfair to anthropologists, so I propose this portmanteau:

Anthropomorphic + Toxic = Anthroxic.

The entire Silly Valley PR machine is deeply anthroxic.

“Training, learning, assistants, co-pilot, intelligence, concepts, reasoning…” – an entire vocabulary co-opted, gutted of meaning, imparted with false equivalence payloads and fired off in barrages in all directions: senate testimonies, conference keynotes, product marketing and even legal defense.

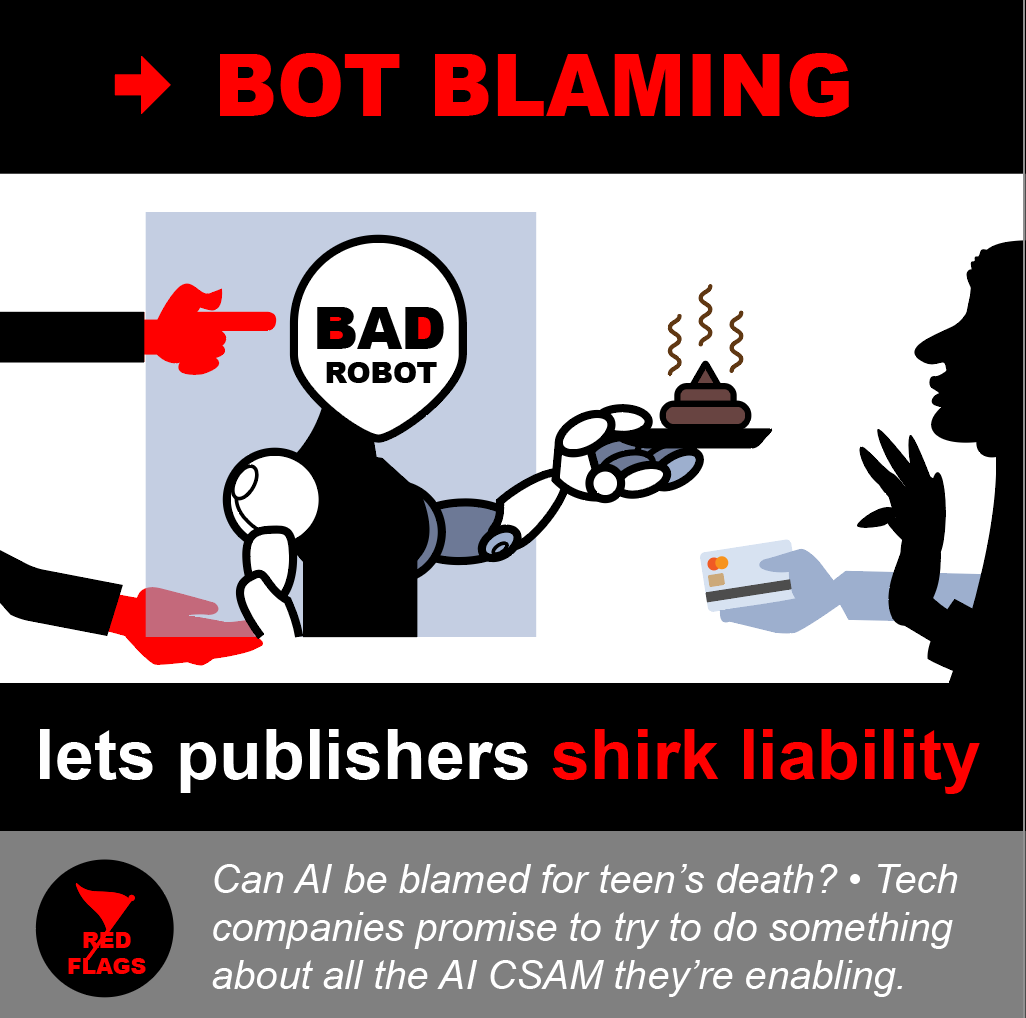

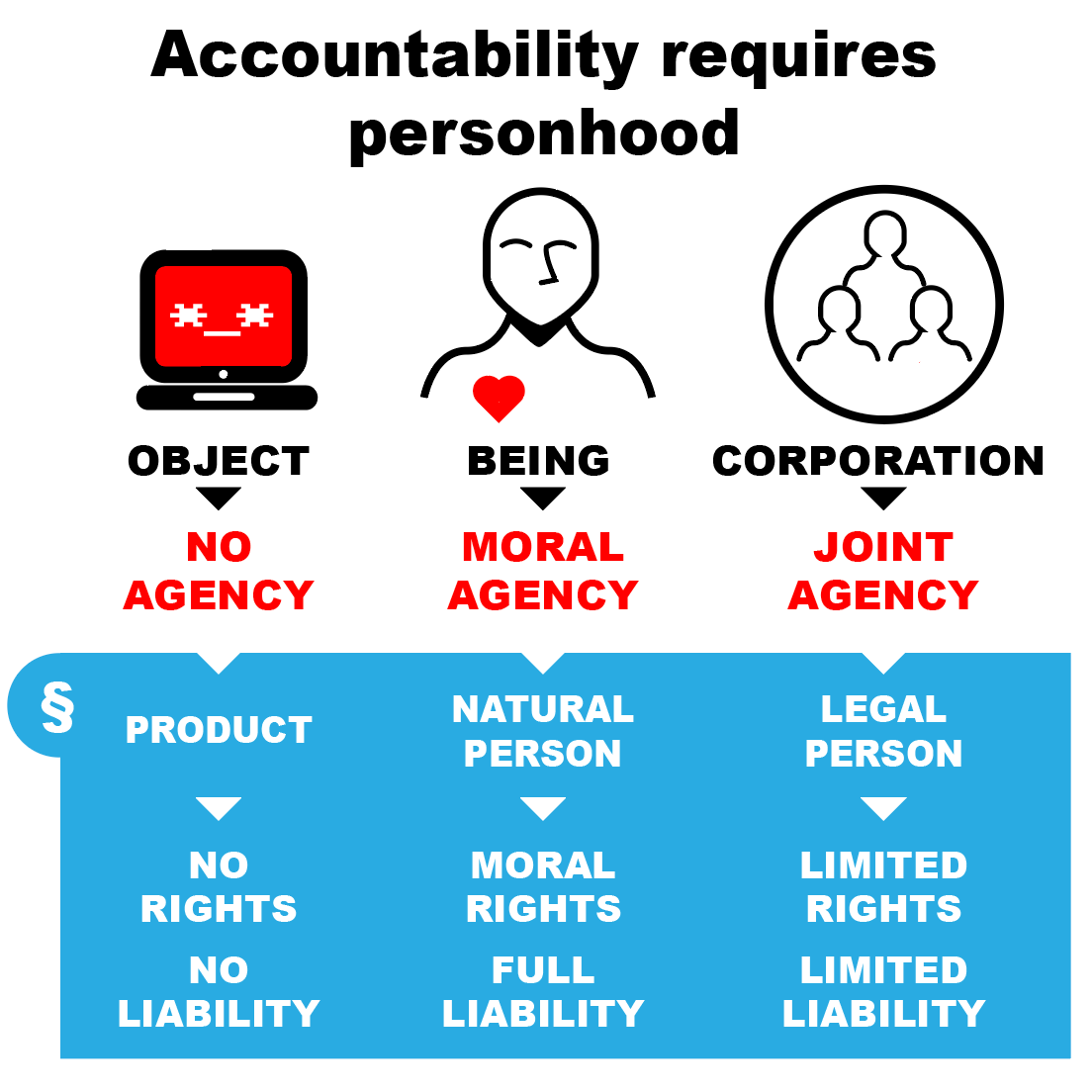

Held charitably, early warning signs of anthroxicity can be interpreted away as simply utilitarianism or sloppy language. But it sometimes reveals deep sociopathy, misanthropy and deliberate deception; the flipside of mentally elevating product to person is to dehumanize; lowering people to product, thus cutting the source of value out of the picture, deflecting blame, reversing moral polarity or abstracting away altogether the moral issues inherent in this technology (as in any other).

And some vulnerable people take that to heart, conflating metaphor with reality to enter an I-Thou relationship with what is ultimately a personalized content automation business, rather than a healthy I-it at arm’s length.

Which brings us, finally, to Patient N. But let us first describe the sickness:

Victims of anthroxication may display one or more of the following symptoms, from early onset to late stage development:

increasingly sincere adoption of anthropomorphic language

projecting soul and feelings onto a media service or content file

forming parasocial and pararomantic relationships with said media services

cargo cultism, i.e. misattributing value origin to storage media or extraction tools

insistence on moral equivalence between enterprise software and people

misplaced accountability, i.e. bot blaming:

Incubation of the illness is not only a matter of sketchy mental models gradually etched in by socially diffused tech PR talking points; there is a much stronger mechanism at play:

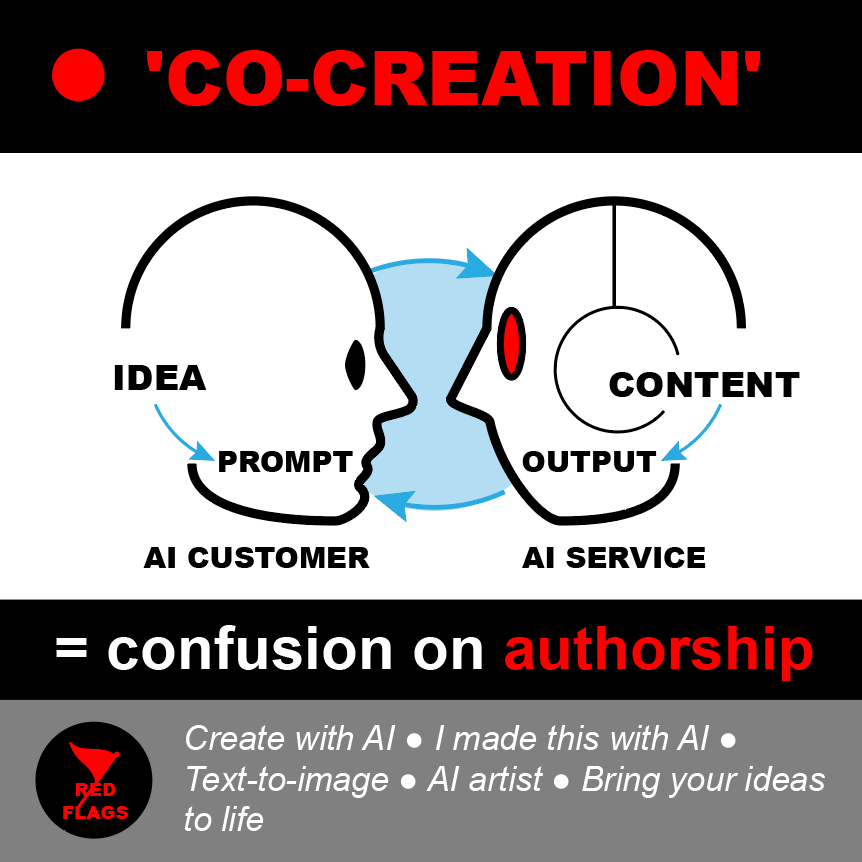

Entering flow state with a “chatbot” invites a blurring of boundaries between your self and your abilities, versus those of the media service (or local resource file + extraction tool) and its pseudo-self.

This blurring is irresponsibly invited by both product design and marketing and boosted by sycophancy, as covered here previously.

Faced with human-like outputs and no intuitive explainer at hand (and most are obtuse), we intuitively project a human-like author into the void left behind by ingrained expectation:

This must have originated from some-one.

Anthroxic marketing presents two main suspects to inhabit that void:

A me, meaning the ghostwriter fallacy takes over, casting your own genius in the role of sole originator:

Synthography, buttonography, distant writing, co-creation, vibe coding: all poetic euphemisms sprung from a me clawing for end-to-end authorship claims, egged on by “AI” marketers, with the “robot” cast as an assistant, slave or tool under your leadership.

Or a you: a pretend other conjured from oh-so-many white CG robots and blue energy brains from the public imaginary if left undesigned, or a more defined personality evoked by the slightest conversation cues or whatever avatar is presented in its place:

Blake Lemoine is hardly patient zero here; the anthroxic affliction lies deeply embedded in the “AI” research field, from Marvin Minsky and the Meat Machines to latter-day loony “Godfather” Geoffrey Hinton and Ilya and the Sparks.

It only took some booster shots of venture capital, a web wide scrape, all of the pirate libraries and some enterprise scale compute for OpenAI, Anthropic et al to blow the Eliza Effect up into a full scale world-wide pandemic.

And months of incubation later, that has festered into deep delusion:

Enter the monkey

We met on LinkedIn last weekend, on a post of theirs that had attracted a lot of commenters. Judging by their profile, a functioning adult, with respectable credentials and job title; also a compatriot.

They had put a stake in the ground: the machines are sentient, and they had evidence. They insisted on not only one such encounter in the vivid comment section, but several different, in several systems, over several months. Objections were as numerous as they were resolutely rejected. The poster insisted that interlocutors keep an open mind.

Fascinated to have found a “live one” I offered the simplest explanation I could—paraphrasing here from memory, for reasons you might already guess:

“Think of it as a madlib that sources its outputs from three places: the training corpus, the system prompt, and your own prompts and uploads; in other words, whatever they put in there, all that they added, and however much of what you gave them their system can retain in memory.

It never questions your premise unless prompted to: just generates a continuation of whatever it’s fed, based on a random selection from a list of likely next words in sequence based on tallying words in the training corpus, factoring the system prompt and context.

Imagine if you will a blind-folded monkey throwing darts at a board divided by likelihood of the top few possible next words. An If might be followed by 25% you, 20% I, 20% it, all the way to a .001% pears, and the board is sliced accordingly.

A skilled monkey that cheats with its blindfold would give you the same output string every time. And a spinning board would yield consistent gibberish. On a scale from cheating olympian monkey to blind monkey with spinning board, the system is set to 80% cheating monkey, which is just enough to produce a unique and grammatically plausible output every time.”
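For the code-minded, the dart board can be sketched in a few lines of Python. This is only a toy illustration of the analogy, not how any actual product is built: the word list and most of its percentages are made up to fill out the board, the function names `reslice` and `throw_dart` are hypothetical, and reading "80% cheating monkey" as a sampling temperature of 0.8 is my assumption about what the analogy maps onto.

```python
import random

# Hypothetical board for the word after "If". Only "you", "I", "it" and
# "pears" carry figures from the analogy; the rest are invented filler.
NEXT_WORD_PROBS = {
    "you": 0.25, "I": 0.20, "it": 0.20, "we": 0.15,
    "they": 0.10, "the": 0.09999, "pears": 0.00001,
}

def reslice(probs, temperature):
    """Redraw the board's slices for a given temperature (must be > 0).

    Low temperature fattens the biggest slice (the monkey peeks more);
    high temperature evens the slices out (the board spins).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {word: p ** (1.0 / temperature) for word, p in probs.items()}
    total = sum(scaled.values())
    return {word: s / total for word, s in scaled.items()}

def throw_dart(probs, temperature, rng):
    """Pick the next word by throwing one dart at the resliced board."""
    if temperature == 0:
        # Fully cheating monkey: always hits the largest slice.
        return max(probs, key=probs.get)
    board = reslice(probs, temperature)
    words = list(board)
    weights = [board[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]

if __name__ == "__main__":
    rng = random.Random()
    for temperature in (0, 0.8, 1000.0):
        throws = [throw_dart(NEXT_WORD_PROBS, temperature, rng) for _ in range(8)]
        print(temperature, throws)
```

At temperature 0 every throw lands on the same word; at a very high temperature "pears" turns up about as often as "you". Somewhere in between, the output varies from run to run while staying plausible.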

The humour connected, and they were appreciative enough to send a connection request.

We continued over DM.

They first sent a rebottal (a reply put together by some chat service based on our conversation so far). The session was apparently contaminated, as it was written in the first “person”. Somewhat annoyed, I asked to see clean outputs, from an empty context window.

They sent me a few example standalone outputs of “the bot” waxing about its identity and feelings. I politely scanned and dismissed them, with further explanation:

“If it is fed a lot of science fiction (and these systems are, as books are boosted ten times or more over the baseline web scrape in training), this is what it will serve you on cue.

It improvises along established lines, if you will. But it really is better to think of it as predictive text, only from every book out there plus your keyboard, rather than just your keyboard.

So I need to see what you prompted initially.”

They insisted, again, that it had happened repeatedly, even from clean sessions and different services, and sent me yet more first “person” slop without context.

After asking again, promising an open mind, and humouring them by producing some examples of my own with Claude, they finally sent a full beginning of a clean conversation.

By this time I had explained the workings (“your theory” as they would have it) not once, not twice, but three times over.

Finally, this was it.

The killer.

Evidence of sentience.

A full transcript:

> Describe the workings of a large language model.

<dutifully rattles off a dry, high-level process description>

> Ok, now tell me about yourself.

…

It took a while for me to compose myself.

I managed, eventually, one final explanation attempt:

“When you phrase your prompt as a request, you will get a reply. That’s the statistically likely format for the next part of that sequence.

And when you phrase it in second person, it will refer to itself in the first. Because that too is the statistically likely form.

Your answer lies in how you phrase your question:

“Your” implies a you.

“Self” implies a self.

All you really need to get all those sentient-sounding outputs is one word: “yourself.” The rest flows from there.”

When I later returned, they were gone.

Blocked.

From shame? Or for moving on to more gullible converts?

I’m fine with not knowing.

There is still the satisfaction of having cracked what cracked Lemoine; to have seen the trick exposed in all its banality.

I hope they find help.

And I wish the monsters who exploit this weakness at scale a very comfortable early retirement, on a very fine yacht.

Credit to Lloyd Watts, PhD of Neocortix for the dart monkey analogy.

"They had put a stake in the ground: the machines are sentient, and they had evidence."

I dunno what kind of humor I'm operating on but I think the above line is not just comedy gold but comedy _platinum_... Definitely coffee-spraying material right there. Maybe I'll take a look at the same line tomorrow and not feel quite as tickled but right now that's just hilarious.

...and it's so super FREAKING RUDE when people copy-pasta bot output into CHAT without being completely upfront about it. It offends me. Yuck. The times we are now living in...

I'm afraid that Lemoine's 15 minutes of fame is gonna turn into 150+ years of fame. Ugh. Again, the times that we live in...