The Ghostwriter Fallacy

Picking apart the "theft" of AI detractors, and the "tool" that obscures it

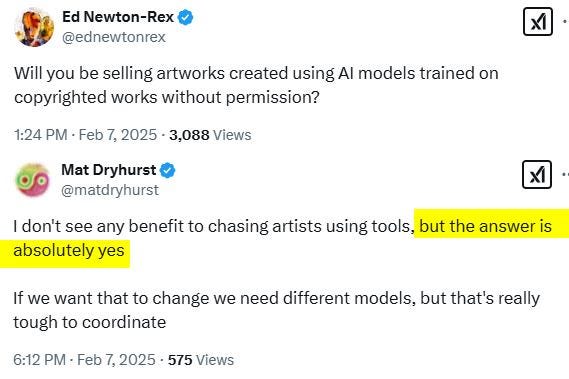

Christie’s “inaugural Augmented Intelligence art auction”, due to start in nine days, is already whipping up a storm, and not the good kind. The open letter of protest above amassed 3,500 signatures in its first 24 hours, and debate rages over at Xitter between, among others, two pivotal figures in the AI art space proper: Ed Newton-Rex of Fairly Trained and Mat Dryhurst of Spawning.ai. Both Brits, both A16z-affiliated, and both creatives by training working on solutions to the fraught issue of consent and licensing.

Oh, and they’re both here on Substack.

I’ll attempt to outline the reasons for the outrage, provide some background, and add some nuance by picking apart two inflammatory words at the core of the debate: the “theft” often slung by detractors, and the “tool” often chosen as a means of obscuring it.

Let’s delve.

Endorsing crime

While it might make marketing sense, for a few fleeting moments yet, to slap the “AI” sticker on absolutely everything, the actual content shoehorned under that moniker by Christie’s is a very mixed bag, much like the present situation in computer science and elsewhere.

Their curation here follows the same logic as ““““AI art”””” museum Dataland: a mix ranging from data art, such as Refik Anadol’s mesmerizing animated data visualizations based on hand-curated datasets and bespoke software, to tech art stunts, such as using an industrial robot as a remote-operated painting arm, to what might be called genuine AI art experiments: deep bleep geekery from before it was uncool, driving cutting-edge experimentation at the frontier of math and art.

So far, so good.

But then.

Then we slide over into run-of-the-mill prompt imagery with extra steps, built on the moral morass of contemporary mainstream genAI services.

In the words of Christie’s:

"using tools like DALL-E, Midjourney and Stable Diffusion, creators collaborate with algorithms or create their own neural networks"

Proudly listing the defendants in the largest IP class action lawsuit in history is a remarkable gamble in itself, and to the critics above apparently THE red flag. We will hopefully find out before long whether this is a case of deliberate provocation or legitimisation, or simply one of not knowing better. Having read Christie’s auction page, I’m still not sure, although red flags abound.

Regardless, inviting these actors into the space at hand will resonate across and outside of the art world, with PR benefits for the defendants.

Stable Diffusion is worth its own brief aside, as it is in a class of its own here. Two reasons:

One, it is freely available to anyone to tweak and twiddle with, leaving it functionally without guardrails whatsoever, and

Two, it not only infringes on several million rights holders’ exclusive commercial exploitation rights, but model publishers Stability AI and RunwayML also never bothered to filter out personal information, biometric data, or harmful and illegal content when processing their web scrape for the first two major versions (1.4 and 1.5).

This makes SD1.5 the engine of choice not only for some versions of Midjourney and a host of free image generators, but particularly for virtual child pornographers increasingly jailed for life, for nudify apps, and for deepnude blackmailers now being extradited over a spate of teen boy suicides.

CSAM watchdog attention has since prompted RunwayML to hastily retire the model, and LAION to retract and reissue the main dataset it’s based on, after volunteers, for whatever reason, took on the work of removing the illegal content on LAION’s behalf.

But only after tens of millions of downloads of an asset that in practice guarantees enforcement-breaking levels of virtual CSAM for the rest of history.

Yet here it is, featured product in a prestigious fine art auction.

The question for Christie’s is: what signal does this send?

Because to a great many artists, writers and others, it reads as a blaring endorsement of tech companies’ absurd business proposition still being pumped as the inevitable second coming:

Free sampling of everyone’s property for competing commercial use,

with zero accountability for publishing, distributing and producing illegal content.

Conflating commissioning with creation

Also disturbing in Christie’s statement above is the reinforcement of the core delusion that powers ““““AI Art””””: conflating commissioning an image from an AI service (as is the case with Midjourney and DALL-E) with creating it.

In simple terms, artificial intelligence art (AI art) is any form of art that has been created or enhanced with AI tools. Many artists use the term ‘collaboration’ when describing their process with AI.

–– Christie’s definition of ““““AI art””””

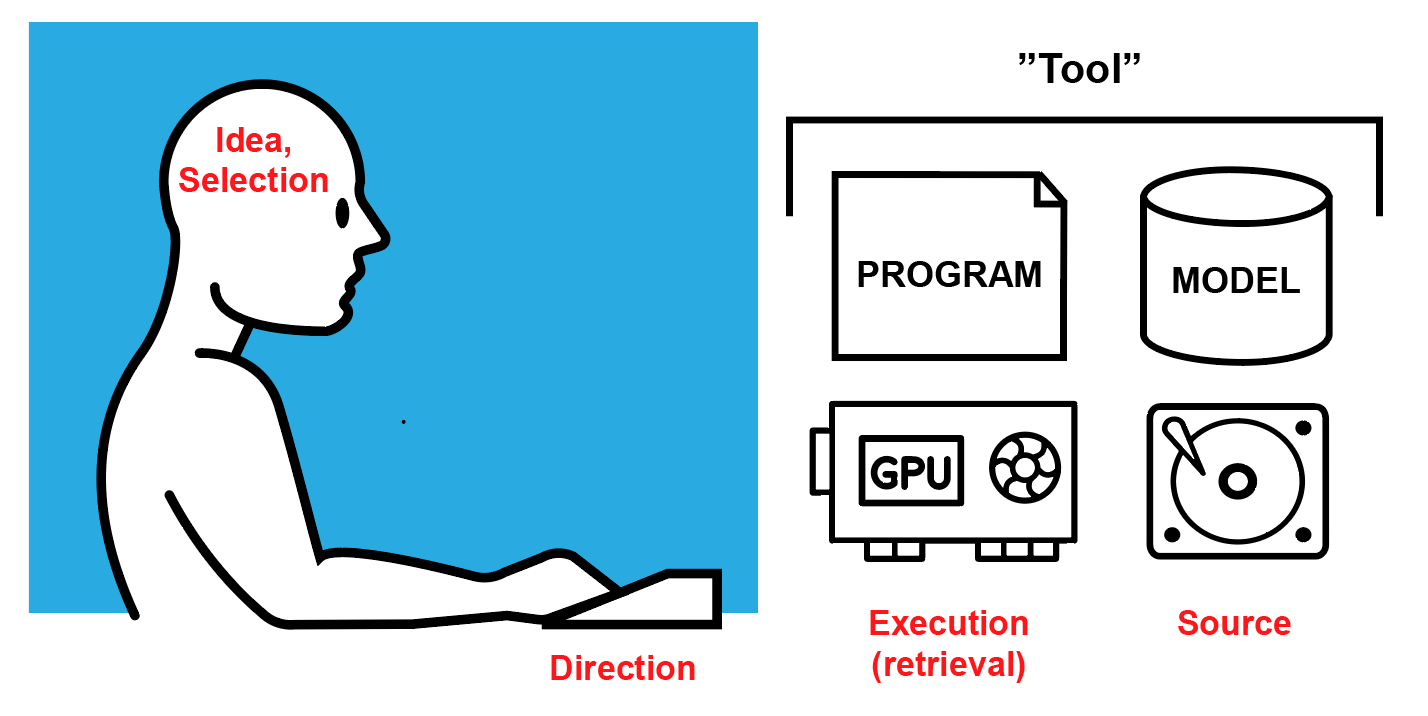

Consistently hammering the “tool” misnomer obscures the vast difference between fully automated synthetic content generation based on other people’s work, and personal expression of your own imagination, by your own skills.

It obscures that the overwhelming majority of the value of image generators lies in expressive content tapped from the underlying works.

And that the overwhelming majority of “creation”, including the bulk of aesthetic and compositional choices, is automated away.

This very deliberately kicks the door wide open to the Ghostwriter Fallacy (a pet peeve I’ve covered before): the vanity trap, so gleefully and successfully exploited by genAI marketers, of feeling entitled to the total outcome of a process regardless of how tiny your own contribution.

The tool goes on:

While I agree with Mr Dryhurst that effort is generally best spent going after the real culprits rather than their users and customers, he is very aware of the 1.5 billion opt-outs of copyrighted works that his company compiled in the six months following the Stable Diffusion 1.5 release, and that OpenAI et al have ignored.

His personal attempt here to profit directly from content repositories built on that illicit haul, while legitimising the practice and magically vanishing the whole issue into the slippery “tool” term, makes him a shining exception to that rule.

Hardly anyone is more keenly aware of exactly what they are doing here.

A16z funds both the “solutions” to online exploitation provided by Spawning and the accelerants of it, such as model marketplace CivitAI and its paid scrape mobs: a classic protection racket. And by professing to work on “the consent layer of the internet,” Spawning serves to obscure the attempted rights reversal at the core: the consent requirement never went anywhere.

And yet, accelerationism is the order of the day.

To that end, Christie’s Director of Digital Art appears to simply parrot the same unreflective techno-determinist dogma as every other passenger on the AI hype train, collapsing all potential futures into The One Way:

‘AI technology is undoubtedly the future, and its connection to creativity will become increasingly important’

This bakes together two self-evident truths:

technology can’t be uninvented

information can’t be unshared

with a wildly faulty implied conclusion:

We must accept and adopt the technology as presented to us, bundled together with whatever content is already out there, regardless of what harms it creates, and regardless of what fundamental rights and legal principles were violated in making it all happen.

When really we should be asking:

From what sources? At what cost? To the benefit of whom, at the expense of whom, and with whom held accountable?

Some of the works here do ask relevant questions.

But never those big ones.

They know better than to disturb the comfortable, or to bite the hand that feeds.

The Kool-Aid has been well and truly chugged, and all integrity thrown out the window.

Seven flavors of AI theft

Now, to the credit of the artists featured in the auction, none of them appear to be garden-variety prompters.

As seen in responses to the USCO’s requests for comments on copyright and AI, and frequently in online ““““AI art”””” debate, prompters stubbornly claim legal recognition as authors of outputs composed for them: for work they didn’t do, with skills they don’t have, from property they didn’t pay for, by artists who protest.

And even though the USCO and courts across the world have repeatedly emphasized that only human contribution merits legal protection, not least in the recent USCO guidance on copyright and AI, that guidance is being happily misreported as a win by the all-in crowd.

But there is clearly more at play here.

Let’s tease apart some of the layers, with inspiration from the May 2024 paper “AI Art is Theft: Labour, Extraction, and Exploitation” by Trystan S. Goetze of Cornell University.

1. Stolen Agency

Digging through the training dataset explorer Have I Been Trained (by, yes, Spawning.ai) is a trip down memory lane as well as a safari for dirty data. What quickly becomes apparent is that if you have ever reached some degree of recognition, copies of your works appear online in all sorts of places, without any involvement on your part, and not necessarily tagged in any way that allows you to find them and enforce your rights to them. This is what makes the UK proposal to upend copyright, flipping the onus onto rights holders to opt out of AI training, so onerous.

Your work is in all likelihood out there, and thus in there, somewhere, contributing to your replacement. Or it will be, if you ever make anything of value: a popular work is, by definition, one that people share.

By granting AI companies blanket permission to commercially exploit others’ property wherever they find it, we have stopped respecting those authors as ends in themselves, instead debasing them to means to someone else’s end. And in doing so, we have committed an act of disrespect to all humanity, to borrow Kant via Goetze.

(I address the absurdity of opt-out at length in this previous post.)

Violation of consent is the original sin of generative AI as-is, and it taints everything downstream.

2. Stolen Art

A popular misconception of copyright is to reduce the full basket of rights attached to a work of authorship to just the right to produce verbatim 1:1 copies. This unfortunate idea is invited by the English language naming the full shebang (“copyright”) after the narrow example (“the right to reproduce the work”) rather than after the basis for the right, as many other languages do: “authorship rights.”

The catchy but hilariously wrong refrain goes “copyright is about copies” and the handiest refutations are sampling in music, and movie adaptations of books.

While all of the featured AI vendors facilitate plagiarism through image-to-image prompting and build in no guardrails whatsoever against it, this is not what has happened here.

And while fishing out mangled remains of most works used in training is possible to some degree, this is an arduous, adversarial process and not really what models are for. Some vendors even provide flimsy fig-leaf guardrails against prompting for specific works and artists.

Again, as far as I can tell, directly tapping specific works by other people, while rampant elsewhere and aided, abetted, encouraged and monetized by the featured product companies, doesn’t seem to be what any of the featured artists go for.

3. Stolen Valor

Probably most infuriating to anyone who studied, trained and sacrificed to build marketable skills and recognition in art is seeing neighbouring professionals such as art directors, marketers and graphic designers, as well as rank amateurs with no professional training, refashion themselves as “artists” for having taken outsourced labor and acquired skills out of the equation, replacing human commissions with subpar synthetic derivatives.

I’m not familiar enough with any of the involved artists to tell whether this happened here. The art world is certainly no stranger to egos inflated to the point of delusion, prompt-powered or not, but I will give the ones listed here the benefit of the doubt.

4. Stolen Credit

Besides elevating oneself on robotic stilts to a title others earned through study, training, dedication and professional experience, there is a lesser form of stolen valor I already mentioned, and this one is rampant: claiming credit for something you didn’t make.

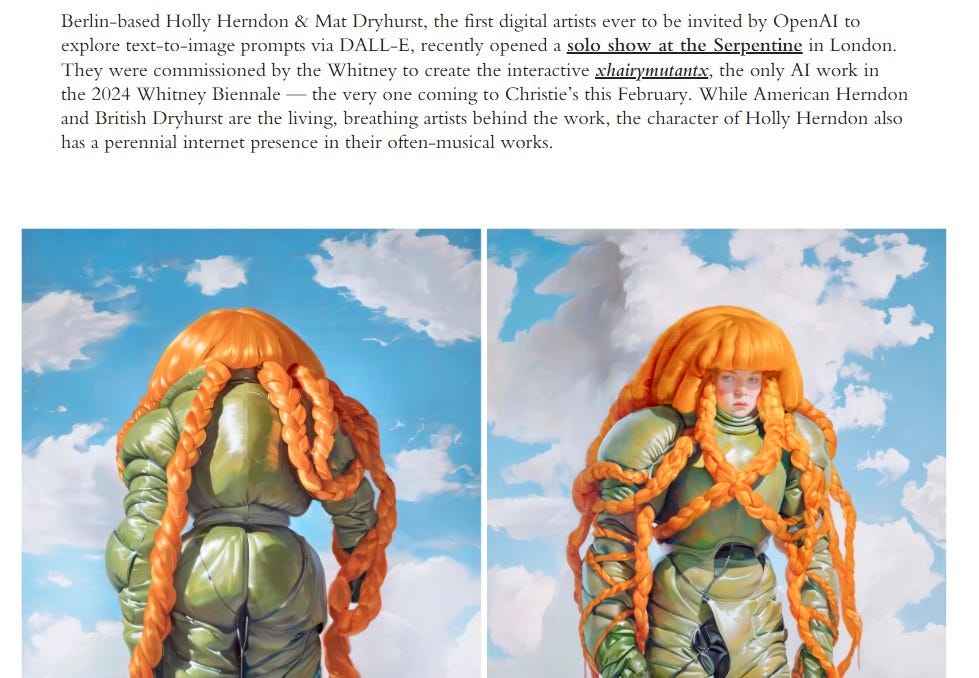

While some of the surrounding process and discussion is interesting enough in its own right, with the extra loops of automation in the case of Keke, and some crowdsourcing and feeding of training data in the case of Herndon’s xhairymutantx, there is no getting around the fact that what is being sold here is images, and those images were produced by algorithm, not artist. By rights (except in the UK and parts of China), this lands them squarely in the public domain. And their being based on illicitly sourced property only adds insult to injury.

But as Goetze concludes, obfuscation of source is a separate issue: deception rather than outright theft. The process has been documented transparently enough, even if all of that nuance gets lost in the deceptive “tool” label wielded on the top row.

Using the word “tool” implies full control and contribution in creation.

This in itself constitutes an act of appropriation.

5. Stolen Opportunity

The art market is a competitive space. I’m not going to tear anyone down over breaking new ground and finding success. And I haven’t looked closely enough at all the works to be in any position to judge. I’ll just point out that taking shortcuts by stepping on peers, even if indirectly, is not going to win any favors.

It’s bad enough that the slop flood rages on every platform; why invite it here?

6. Stolen Expression

Another sin the listed artists do not commit, but indirectly endorse by way of their chosen “tools.” Style theft is one of the key innovations, and key features, of generative AI. Entire sites are dedicated to exploiting it, as are CivitAI competitions and Midjourney Magazine.

Style is a quality previously only replicable through similar skill and hard labour, and thus in no need of legal protection. It is now productized by sampling from across a body of works, hyperautomated, and in dire need of legal clarification.

As long as we manage to scrub our language and thinking of toxic anthropomorphization, I find it likely to be covered by derivative rights or some variation thereof, although that remains to be tested in court (as far as I’m aware; things do develop quickly).

7. Stolen Paint

Imagine for a moment that every algorithm used here were written by the artists themselves. From scratch, with no inspiration whatsoever from anything that came before. All the math invented on the spot.

Hell, let’s even imagine that they, by some superhuman ability, could perform by hand each and every matrix multiplication required to synthesize a new image from a prompt, at light speed: Look ma, no GPU!

And let’s imagine the prompt was perfect.

Even then—

The outputs would be nothing without the source material.

And only ever as good as the source material.

Every aesthetic choice, every shade of every pixel, was selected and placed by someone. Even when ground down into the tiniest bits, or more accurately, fused with a thousand other sampled images, there is nothing in the model but latent image content. The code is but a means of extracting it, and a prompt is never more than a set of retrieval coordinates.

Stolen Closing Words

Or rather, borrowed. Again from Mr Goetze, the closing words of his essay, here aimed at those who accelerate, legitimize and profit from the rights heist of the century:

“New approaches to data collection and processing, as well as enforceable regulations codifying the underlying ethical principles, are needed for large AI model development to be morally permissible.

Until then, these impressive new technologies do not stand on the shoulders of giants; rather, they parasitize their innards.”

He’s being way too kind.