The two Big Lies behind the heist

An illustrated guide to artist market harm and the exploitation ecosystem

Debunking “AI” lies has been a running theme in this blog since the start. My project here is to systematically dismantle key misconceptions and framings.

Some of these run deeper than others.

My previous post, diffusion is compression, placed image models in their lineage of image compression algorithms, and clarified how corporate automated processing of online assets is fundamentally, categorically different from human learning through inspiration and reference.

The aim was to provide grounding against AI Big Lie #1: Learns Like People — from the outlook of this Gen X digital immigrant and visual professional.

It is admittedly reductive to present diffusion (and transformer, transfusion, GAN etc) models as only compression, but grounding it in calculation and computation, separating it from humans, and including the source material in the frame is an essential antidote to toxic humanisation and the backwards frame of products and people sharing any similarities.

We will now look at AI Big Lie #2: You Made This! and how these two together lay the groundwork for the ongoing value transfer and assault on rights.

(I previously covered how this Big Lie exploits the Ghostwriter Fallacy: our tendency to inflate our contribution and take credit for work we didn’t do.)

We will then zoom out to see the value flows of the assets mentioned in the previous post, and outline the general shape of the ecosystem.

The aim here is to inform on market harms to rights holders and creators from degenerative “AI”, which is a key point in Kadrey v Meta and the 40 other US AI/IP lawsuits.

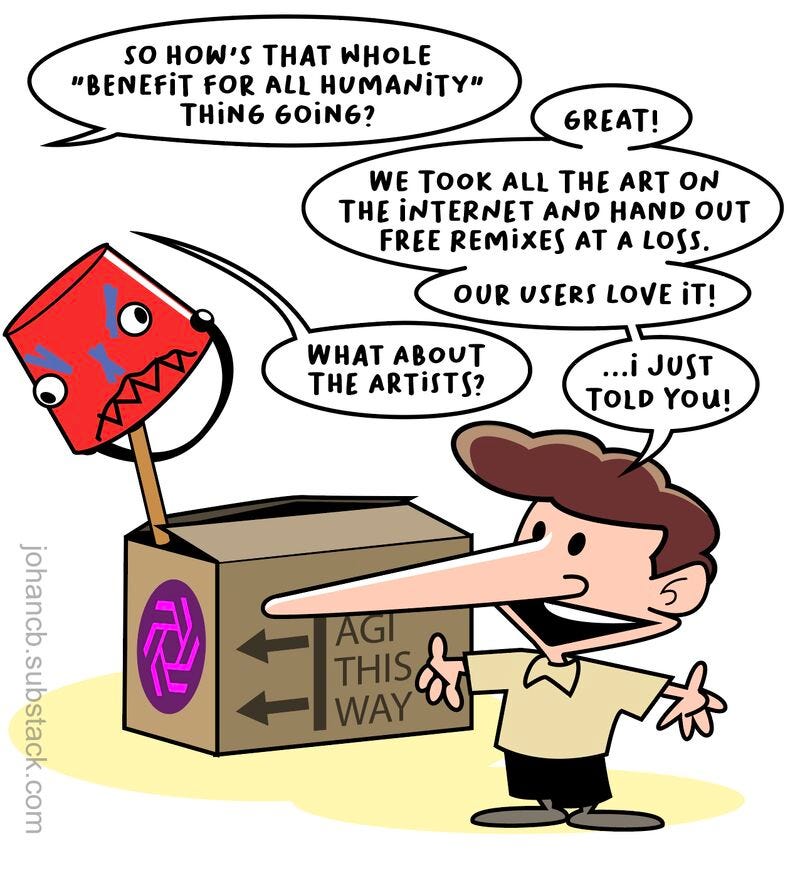

But first: cartoons!

The business model of Question Hound

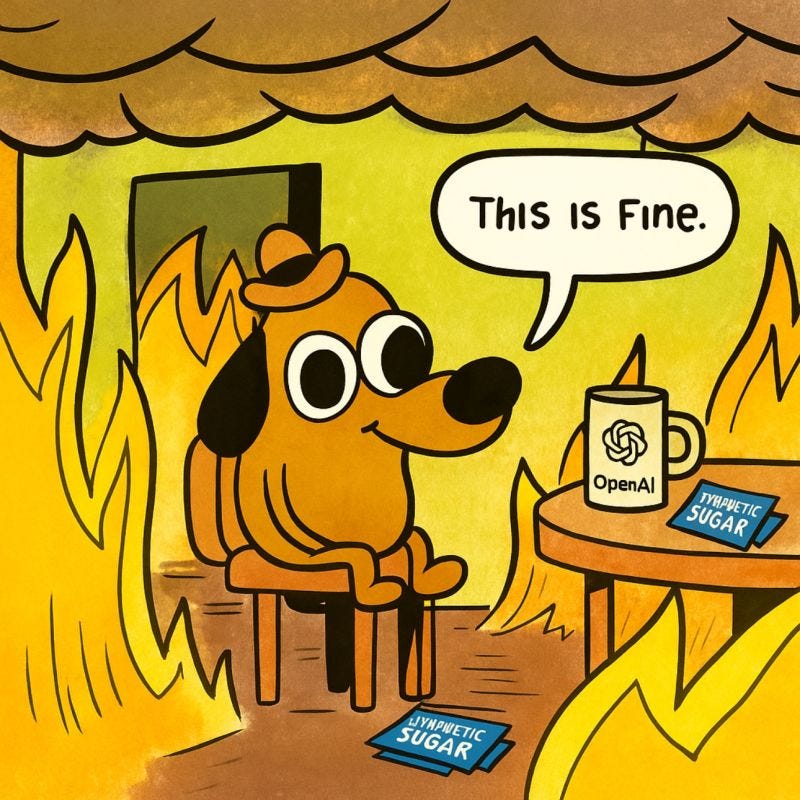

Most people know Question Hound from KC Green’s Gun Show as “This is fine dog.” That’s how excellent hype debunker Denis O. probably prompted for him for his recent writeup about the latest ChatGPT version getting retracted for adding too much synthetic sugar.

As we saw back on Ghibli Day, accelerationists will tell cartoonists that OpenAI providing their memes, characters and styles as prompts to their customers is a boon that helps them stay relevant; that nobody made money online anyway, and that they should be grateful to be part of the future.

Similar demands for gratitude for rape were slung at photographer Jingna Zhang (of Cara.app, Zhang v Google and Zhang v Dieschburg fame) after she protested finding 50,000 Midjourney images in her name.

Never mind the millions of new users attracted to their service, they say. Spoils to the winners! Art hath been democratized.

By this warped logic, the old Internet is dead already. Socials, forums, blogs, web pages and meme sites are gone, or fading into irrelevance so fast that they are practically over.

What the e/acc crowd gloss over is that this new paradigm still doesn’t come with a business model. And that’s kind of the point. Disruption for disruption’s sake, technology unbounded by law, norms and second thoughts. A short-termist offshoot of a cyberlibertarian/anarcho capitalist/techno fascist/techno feudalist/TESCREAL ethos yielded this experiment in primitive accumulation and market dumping:

What happens if we centralize all of the world’s IP, including everything ever published and all of the internet, sell it wildly below cost through a randomizing remix bot, and tell people they made what they bought?

While fellating them with a ScarJo voice?

Surely nobody would go for that?

Surely anyone who resists is a small-minded Luddite, looking to stifle innovation by regulation. Can’t they see the pot of UBI at the end of the rainbow?

We are expected to accept that rather than an attempt at establishing a new layer of extractive infrastructure that would forever pull the ladder up for human creators, spearheaded by a service that competes directly against them with infinite instant remixes of their work, generative AI is The Future®, soaring above, untethered from its source material, exempt from input costs and output accountability.

But licensing—striking a deal for permission to commercially exploit—is what enables KC Green to continue making art.

When he entered the scene there was a mature, if shitty, value contract in place for creators on the web: eyeballs for ads, copyright be damned; there are still derivative rights and adaptation rights. Grind away at the zeitgeist, stick to your vibe, land jokes that connect and if you’re oh so lucky, a viral hit lands you licensing deals for merch and media spinoffs and a stable direct audience. Remix culture and meme culture are part of the package, and to be celebrated. Whereas hustle culture means your stuff will be yoinked for selling ads and t-shirts – but what can you do?

What happens to human art when we transfer derivative and adaptation rights upwards too, to tech monopolies, rather than laterally, at the moment of publication? When instead of peers riffing off of each other’s jokes and ideas, a corporate Independence Day UFO descends above it all, vacuums it up, sucks it dry, grinds it down and dumps slop bomb mats back onto the commons?

Simple: there will be less human art.

Fewer originals, endless derivatives.

A corporate tax on remixes, and hustle culture supercharged by automation.

(Incidentally, KC wants to put his dog to rest.)

Generative AI companies aim to add a new layer between user and source, all obscure to the general public, to sit quietly in some remote datacenter, chugging water, collecting tax on the internet and extracting rent for datacenter access.

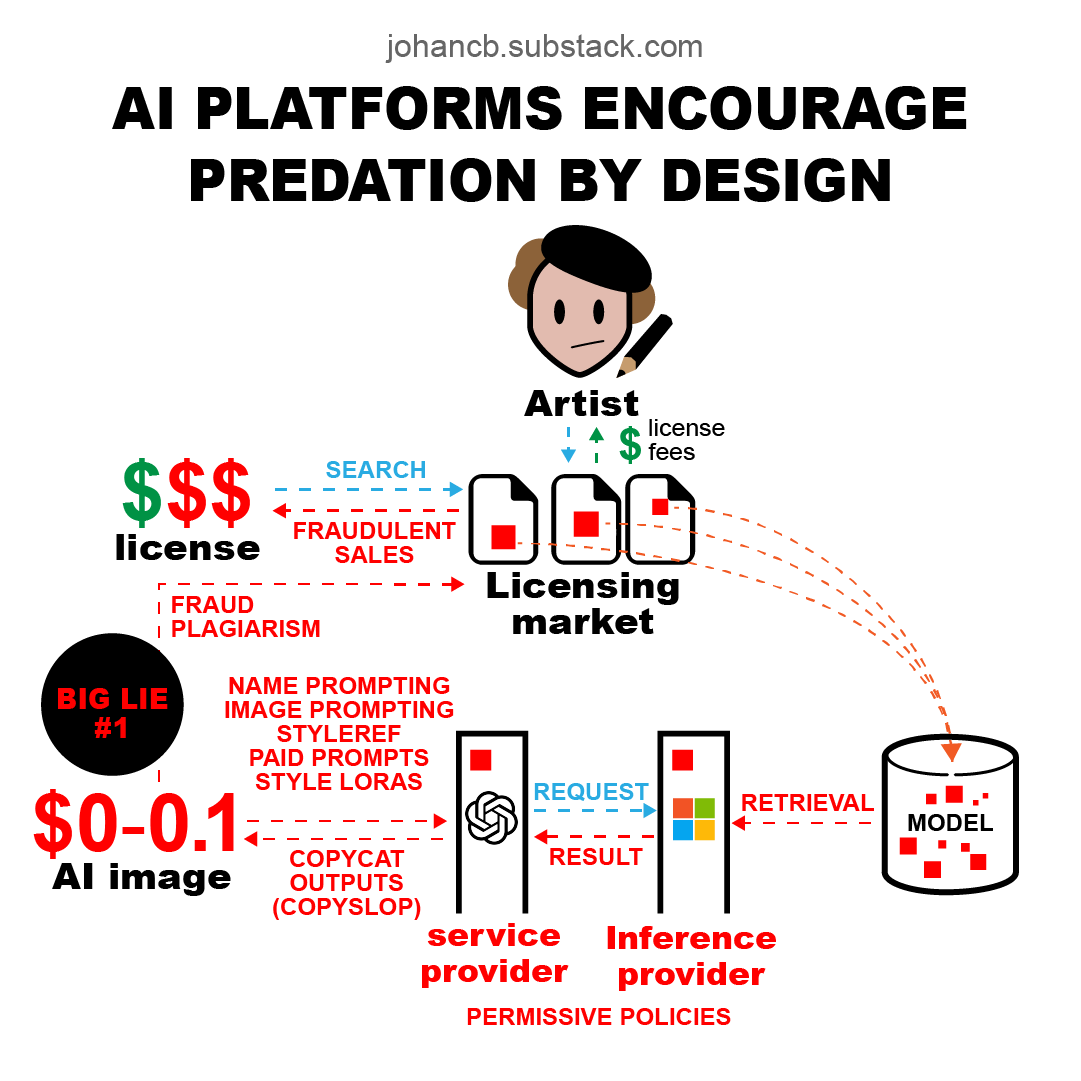

The degenerative “AI” exploitation ecosystem

Someone makes models by sampling a large body of works. Someone runs the extraction script from the model in a datacenter, producing pictures. And someone else yet charges you rent for accessing the service and passes along the requests.

There are more middlemen and value chain actors, but these are the key ones.

The inference provider hosts models and produces all outputs. With a dirty model, they store as well as produce illegal content.

The service provider holds your account and credit card, processes your prompts and filters the outputs. This means they sell the content – only they present it as a subscription, where they charge you for the compute – as credits, buzz, tokens etc – for legal reasons. The content they produce is not covered by copyright. This technically does not prohibit them from selling it, but there is also no legal protection for it; hence insisting on Big Lie #2: you made what you ordered.

And the model maker is the one who scraped you and will keep doing so, along with the top content available to license (not that they did) and the worst bottom dregs of the internet, which they then may or may not have filtered out.

Examples

OpenAI both make models and sell services, but Microsoft run the extraction scripts on their Azure datacenters. Microsoft also provides their own services based on the same models, in both Bing and Windows. So because OpenAI didn’t filter out celebrities and NSFW from the DallE model, and the guardrails in Windows failed, Microsoft made Taylor Swift deepfakes for customers using OpenAI’s model, in their datacenter, with a service they had rolled out to millions of minors.

In the rival Muskiverse, Black Forest makes the Flux image models, xAI runs the extraction, and X sells the Grok service. Which incidentally produces deepfakes, without guardrails, directly to verified ~~adults~~ men. (Notice a pattern?)

Stability both make models and sell access to them via their Dream Studio service, but they also offer their models on several different inference platforms such as Microsoft Azure and Replicate.

Collaborator and co-defendant Midjourney make models, based partially on Stability models, and run the service (inference provider unknown).

CivitAI sell the service and host third party models, and OctoML hosted them and ran the extraction scripts until they realized that CivitAI made them a very large producer of virtual CSAM and dropped them like a hot potato – likely after payment processor or watchdog pressures.

Market dumping value flows

It is well known by now that most models were sourced through piracy or illicitly scraped key content from sites with prohibitive terms.

This enables them to sell their services below cost, and to undercut both the licensing and commissions markets by several orders of magnitude.

This is a predatory strategy called price dumping or market dumping – outlawed as an illegal anti-competitive practice in most countries.

How the two AI Big Lies support the assault on your rights

Here’s how it fits together:

Big Lie #1: Learns Like People provides cover for model makers to sample your works for free.

While Big Lie #2: You Made This markets a directly competing service as a “tool” where customers made what they bought, to position end-users as the direct artist competitors, letting their real direct competitors, the service providers themselves, recede into the background.

How Big Lie #1 provides cover for predatory behavior

Service providers offer a range of tools for prompting, transforming and editing images, all for providing value to their customers, with no regard for rights holder interest in what goes into the system at run time, or what went into the model at training time: an entirely one-sided value extraction process, at global population scale.

All ecosystem actors actively encourage and charge for extracting marketplace value from established artists and popular works. It’s core to their marketing, their value proposition, and their overall attractiveness to users, developers and customers.

Midjourney listed 16,000 artists and photographers, most of whom are active today, for targeted scraping and training and use in their marketing. This has resulted in the largest IP lawsuit in history.

Stability list 3,400 artist names with their model and encourage commercial use despite never having consulted any of them. Their main featured use case is to prompt for new derivatives from those artists. Same lawsuit.

Google do the same with Imagen, which is why they are being sued by Jingna Zhang.

OpenAI went viral by selling Ghibli derivatives to coal barons, IDF soldiers, ICE deportation officers, Trump representatives and many others whom Miyazaki himself would have never dealt with. This attracted millions of new users, and billions in VC funding. Legal action is rumoured to be underway. Also from Moulinsart, over Tintin.

CivitAI held a Halloween scraping contest in 2023, spending thousands of Andreessen VC dollars on dispatching their goons to scour the internet for yet unscraped artists and publish exploitation models of their works.

Market dilution is market obliteration

Publishing original works on the internet now comes with negative returns from the fierce competition by AI powered copycats, and severe popularity penalties: whoever sticks out will be auto-plagiarized. The USCO aptly coined “market dilution” as a term for it in their latest report. Judge Chhabria in Kadrey v Meta more drastically labeled it “market obliteration.”

And let’s not forget the added popularity penalty for any published artist, of fans sharing your works all over the place as a free windfall for model makers.

Slop floods marketplaces, depleting royalty pools

And finally, Big Lie #2 provides cover for flooding online marketplaces with slop, again massively undercutting prices and depleting royalty pools. Moderation systems buckle or break under the load, meaning that fraudulently non-AI labeled outputs slip by to sell next to real works, often in the name of prompted artists. Some image markets have a permissive policy towards generated outputs as long as they are labeled. And some even provide indemnification – coverage of legal fees in case buyers get in trouble.

Adobe is the prime example, featuring a stock marketplace filled to a third by slop, and having trained their own Firefly model on pirate model outputs — competing openly against their own contributors, whose works are also being held hostage and used against them, priced unilaterally at below-market rates.

Further reading

Feel free to use these infographics as copy-pasta. That’s what they’re for. AI debate is broken. These will help push back.

I may also collect them at some point; subscribe for updates on that.

The long-awaited part III of USCO guidance on Generative AI training just dropped. The 100+ pages present a nuanced enough view on fair use that it looks to have prompted the Trump administration to fire the head of the copyright office.

For a deep dive on the wider set of ethical issues along the full value chain, a paper called The ethics of AI value chains by Blair Attard-Frost and David Gray Widder was also recently published.

And philosophy paper AI Art Is Theft is another good read, providing a thorough breakdown of different aspects of theft and how they apply to the issues at hand. I covered it in a previous post.

Or hey, why not go dive into the Gun Show comic? KC published the entire archive online.

He put it there for you, you know.

Human to human.
